Big data has become a popular term in the market these days. In fact, the term was not introduced in the past year or even a few years ago; it has existed for a long time. When we hear this term, we often think it is something mysterious. But, NO. Big data means nothing but data that is big: simply huge amounts of data (say thousands of petabytes, or even more).

Big data existed before; only the term has become popular now. Take Google, for example. We all know that Google holds a lot of information about web pages, and it runs roughly 2,000,000 servers, which shows just how enormous its data is. And it is not just Google; many companies hold large data sets.

But what will you do with all the data?

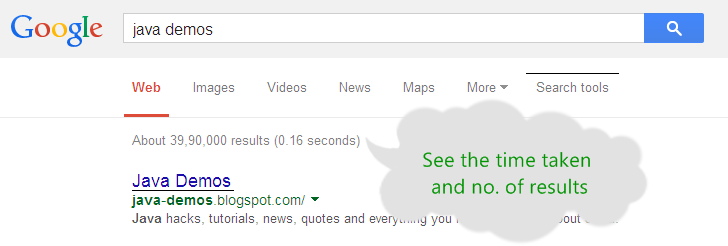

Data exists so that we can perform operations on it and get the output we need. For example, when you search for something on Google, you see millions of results along with the number of seconds Google took to return them.

In the example above, Google found about 3,990,000 results in 0.16 seconds. Isn't that cool? Processing that many records and putting the best results at the top with sub-second latency is impressive.

This is what you need to understand about big data.

When data is big, processing it obviously takes more time. Yet what we need is to perform any number of operations on that data within seconds.

Now, try copying a 10 MB file from your F:\ drive to your C:\ drive and see how long it takes. It takes a noticeable moment, right? So how do you think Google searches through petabytes of data in 0.16 seconds? Consider that Google uses about 200 algorithms to filter search results, and all of them must work over petabytes (more, in fact) of data. How is that possible in 0.16 seconds? Can we do it too? Yes, absolutely, with the right tools!

What is Hadoop?

When people talk about big data, they talk about Hadoop, and less often about MapReduce. I am not going to explain everything about Hadoop here; however, I will give you a brief introduction to what it is.

Earlier you saw that petabytes of data are processed within 0.16 seconds. When you have such huge amounts of data, you also need to deliver results in nearly the same time, so there must be a way to do it. Is writing simple programs and making efficient use of variables going to achieve that? No; this is a matter of software design. You need to find a way for the system to get the work done in less time.

Now, you know that Google has lots of servers. So tell me: does Google store all of its data on one server?

When you search on Google, your query obviously goes to some server, but does that server contain all the data you are looking for? Absolutely not. Is that server going to do the whole job by itself?
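To make the idea concrete, here is a minimal sketch of one common way to spread data across servers: hash partitioning, where a key (say, a page URL) is hashed to pick the server that stores it. This is an illustrative assumption, not a description of how Google actually lays out its data; the function names and sample URLs are hypothetical.

```python
import zlib


def server_for(key: str, num_servers: int) -> int:
    """Pick a server index for a key by hashing it (simple hash partitioning)."""
    # zlib.crc32 gives the same value on every run, unlike Python's built-in
    # hash() for strings, so every machine computes the same placement.
    return zlib.crc32(key.encode("utf-8")) % num_servers


def partition(keys, num_servers):
    """Group keys by the server responsible for storing them."""
    shards = {i: [] for i in range(num_servers)}
    for key in keys:
        shards[server_for(key, num_servers)].append(key)
    return shards


# Hypothetical page URLs spread over 3 servers.
pages = ["example.com/a", "example.com/b", "news.example.org", "blog.example.net"]
for server, keys in partition(pages, 3).items():
    print(server, keys)
```

Because the hash is deterministic and the number of servers is known, any machine can work out which server holds a given key without asking a central directory.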

Does it contain all the code needed to process the data? No.

Say, for example, your query is passed on to another server. Is that server going to execute all of Google's programs? No.

Even if it did, how much RAM would it need to operate on petabytes of data? How many processors would it need to process that much data in 0.16 seconds?
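A quick back-of-the-envelope calculation answers the RAM question. The sketch below assumes, purely for illustration, 256 GB of RAM per server and decimal units (1 PB = 1,000,000 GB); `servers_needed` is a hypothetical helper.

```python
import math

PETABYTE_GB = 1_000_000  # 1 PB = 10**6 GB, using decimal units


def servers_needed(data_gb: float, ram_per_server_gb: float) -> int:
    """Minimum number of servers whose combined RAM can hold data_gb."""
    return math.ceil(data_gb / ram_per_server_gb)


# 1 PB of data with an assumed 256 GB of RAM per server.
print(servers_needed(PETABYTE_GB, 256))  # prints 3907
```

Just holding a single petabyte in memory would take thousands of such machines, before any processing happens at all.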

What is the largest amount of RAM you have heard of in a single machine? How many processors can fit in a single server?

You have your answer right there. The key is that one server does not do the entire job; instead, the job is split across multiple servers. When Google has to perform many operations, it gives each operation to a server, then combines the results of all those operations and returns them to you. This not only makes efficient use of resources but also produces results faster.
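The split-then-combine pattern described above (often called scatter-gather) can be sketched in a few lines of Python. Here worker threads merely stand in for separate servers, and summing numbers stands in for real work; the function names are hypothetical. Because of Python's global interpreter lock, threads will not actually speed up this CPU-bound sum; the point is the structure, which on a real cluster runs on different machines.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Split data into `parts` roughly equal chunks."""
    if not data:
        return []
    size = -(-len(data) // parts)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def work(chunk):
    """The operation each 'server' performs on its share (here: a sum)."""
    return sum(chunk)


def scatter_gather(data, servers=4):
    chunks = split(data, servers)
    # Each worker thread stands in for one server processing its own chunk.
    with ThreadPoolExecutor(max_workers=servers) as pool:
        partial_results = list(pool.map(work, chunks))
    # Combine the partial results into the final answer.
    return sum(partial_results)


print(scatter_gather(list(range(1, 1_000_001))))  # prints 500000500000
```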

Roughly speaking, no matter how much data you have, as long as the work can be split up, adding more servers to process it lets you produce results in less time. If a single server had to do all of it, it could take many days, even with a powerful configuration.
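The arithmetic behind this claim, in its idealized form, is simple division. The sketch below assumes the work splits perfectly and ignores network and coordination overhead, which real clusters always add, so actual gains are smaller. `ideal_time` is a hypothetical helper.

```python
def ideal_time(single_server_hours: float, servers: int) -> float:
    """Idealized run time when work splits perfectly across `servers` machines.

    Assumes zero coordination or network overhead, which is never true in practice.
    """
    return single_server_hours / servers


# A job that would take one server 10 days (240 hours):
for n in (1, 10, 100):
    print(n, ideal_time(240, n))  # prints 240.0, then 24.0, then 2.4 hours
```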

Apache Hadoop is a framework that lets us process big data in less time. It contains an implementation of MapReduce and also HDFS (the Hadoop Distributed File System, which manages data by distributing it across multiple servers). Learn more about HDFS here.
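To give a feel for the MapReduce model that Hadoop implements, here is a toy, single-process word count in Python that mimics its map, shuffle, and reduce phases. It does not use Hadoop's actual (Java) API; the function names are hypothetical, and a real Hadoop job would run these phases in parallel across the cluster.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    for word in document.lower().split():
        yield word, 1


def shuffle_phase(pairs):
    """Shuffle: group all values emitted for the same key together."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all values for one key (here: add up the counts)."""
    return key, sum(values)


def word_count(documents):
    # Hadoop tries to run map tasks on the nodes that store the input data;
    # here every phase runs in one process purely for illustration.
    pairs = (pair for doc in documents for pair in map_phase(doc))
    groups = shuffle_phase(pairs)
    return dict(reduce_phase(k, v) for k, v in groups.items())


docs = ["big data is big", "hadoop processes big data"]
print(word_count(docs))
```

Each map call only needs its own document and each reduce call only needs the values for one word, which is exactly what lets the framework spread both phases over many servers.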

Now, decide for yourself whether it is worth worrying about or not.
